When I last talked about the ragtag fleet of computers I now generously call a "homelab", I had converted my gaming/VM machine back from Proxmox to Windows, where it remains (successfully) to this day.

For a while, though, I've been eyeing converting my NAS from TrueNAS Core to Scale. While I really like FreeBSD technically and philosophically, running Linux was very appealing for a number of reasons. Still, it was a high-risk operation, even though the actual process of migration looked almost impossibly easy. For some reason, I decided to take the plunge this week.

The Setup

Before going into the actual process, I'll describe the setup a bit. The machine in question is a Mac Pro 1,1: two Xeon 5150s, four traditional HDDs for storage, and a handful of M.2 drives. The machine itself is far, far too old to have NVMe on the motherboard, but it does have PCIe, so I got a couple adapter cards. The boot volume is a SATA M.2 disk on one of them, while I have some actual NVMe ones serving as cache/log devices in the ZFS pool. Also, though everything says that the maximum RAM capacity is 32 GB, I actually have 64 in there and it's worked perfectly.

It's a bit of a weird beast this way, but those old Mac Pros were built to last, and it's holding up.

Also, if you're not familiar with TrueNAS and its different variants, it's worth a bit of explanation. TrueNAS Core (née FreeNAS) is a FreeBSD-based NAS-focused OS. You primarily interact with it via a web-based GUI and its various features heavily revolve around the use of ZFS, while its app system uses FreeBSD jails and its VM system uses Bhyve. TrueNAS Scale is a related system, but based on Debian Linux instead of FreeBSD. It still uses ZFS, and its GUI is similar to Core, but it implements its apps and VMs differently (more on this in a bit). For NAS/file-share uses, there's actually less of a difference than you might think based on their different underlying OSes, but the distinctions come into play once you go beyond the basics.

The Conversion

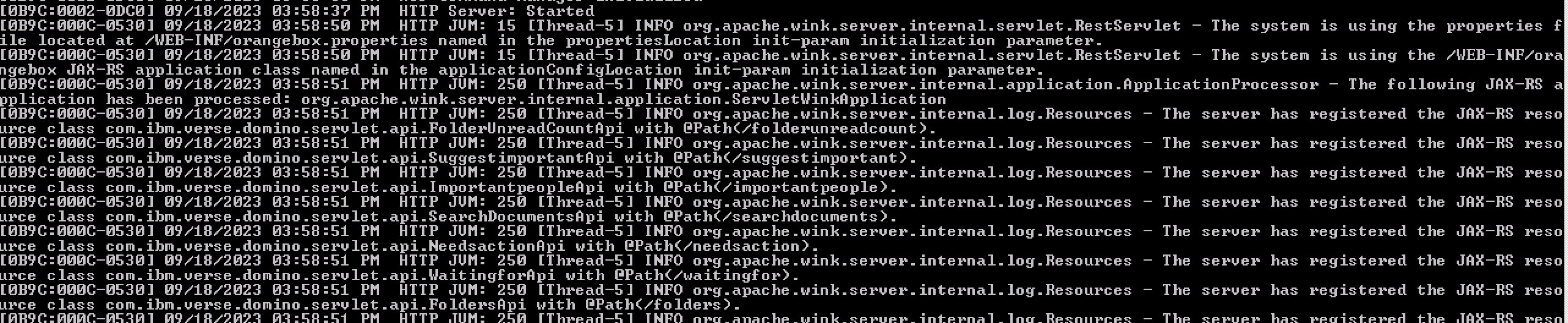

If anything, the above-linked documentation overstates the complexity of the operation. I didn't even need to go the "manual update" route: I went to the Update panel, switched from the TrueNAS Core train to the current non-beta TrueNAS Scale one, hit Update, and let it go. It took a long time, presumably due to the age of the machine, but it did its job and came back up on its own.

Well, mostly: for some reason, the actual data ZFS pool was sort of half-detached. The OS knew it was supposed to have a pool by its name, but didn't match it up to the existing disks. To fix this, I deleted the configuration for the pool (but did not delete the connected service configuration) and then went to Import Pool, where the real one existed. Once it was imported, everything lined back up without further issue.

Since Scale is basically a completely different OS, there are a number of features that Core supports but Scale doesn't. Of that list, the only one I was using was the plugin/jail system, but I had whittled my use down to just Postgres (containing only discardable dev data) and Plex. These are both readily available in Scale's app system, and it was quick enough to get Plex re-set-up with the same library data.

Apps

As I mentioned, TrueNAS Core uses a custom-built "plugin" system sitting on top of the venerable FreeBSD jail capabilities. Those jails are similar in concept to things like Docker containers, and work very similarly in practice to the Linux Containers system I experienced with Proxmox.

TrueNAS Scale, for its part, uses Kubernetes, specifically by way of K3s, and provides its own convenient UI on top of it. Good thing it does provide this UI, too, since Kubernetes is a whole freaking thing, and I've up until this point stayed away from learning it. I guess my time has come, though. Kubernetes is distinct from Docker - while older versions used Docker as a runtime of sorts, this was always an implementation detail, and the system in use in current TrueNAS Scale is containerd.

Setting aside the conceptual complexity of Kubernetes, this distinction from Core is handy: while not being Docker, Kubernetes can consume Docker-compatible images and run them, and that ecosystem is huge. Additionally, while TrueNAS ships with a set of common app "charts" (Plex included), there's a community project named TrueCharts that adds definitions for tons and tons more.

Domino

That brings me to our beloved Domino. I had actually kind of gotten Domino running in a jail on TrueNAS Core, but it was much more an exercise in seeing if I could do it than anything useful: the installer didn't run, so I had to copy an installation from elsewhere, and the JVM wouldn't even load up without crashing. Neat to see, but I didn't keep it around.

The prospect on Scale is better, though. For one, it's actually Linux and thus doesn't need a binary-compatibility shim like FreeBSD has, and the container runtime meant I could presumably just use the normal image-building process. I could also run it in a VM, since the Linux hypervisor works on this machine while bhyve did not, but I figured I'd give the container path a shot.

Before I go any further, I'll give a huge caveat: while this works better than running it on FreeBSD, I wouldn't recommend actually doing what I've done for production. Though it'll presumably do what I want it to do here (be a local replica of all of my DBs without requiring a distinct VM), it's not ideal. For one, Domino plus Kubernetes is a weird mix: Kubernetes is all about building up and tearing down a swarm of containers dynamically, while Domino is much more of a single-server sort of thing. It works, certainly, but Kubernetes is always there to tempt you into doing things weird. Also, I know almost nothing about Kubernetes anyway, so don't take anything I say here as advice. It's good fun, though.

That said, on to the specifics!

Deploying the Container

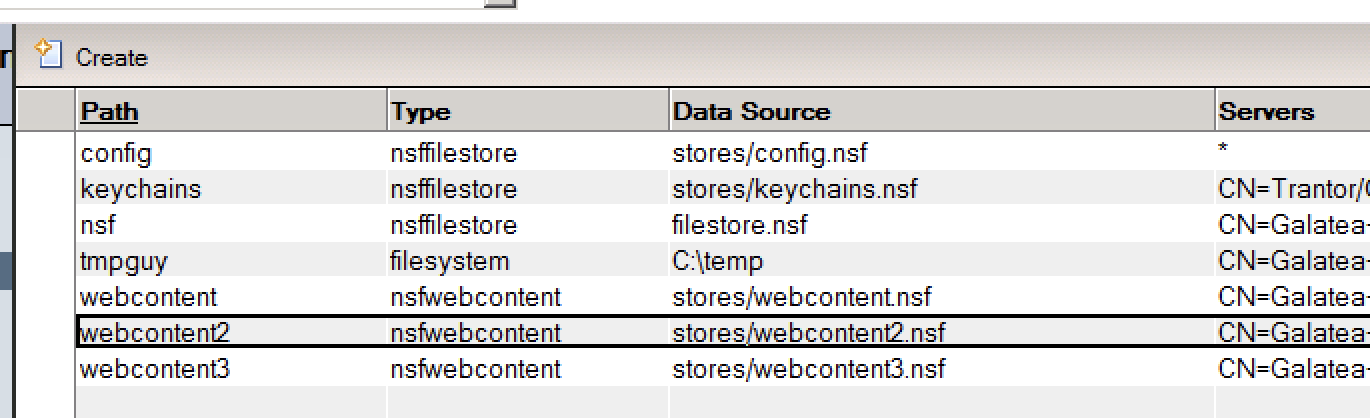

The way the TrueNAS app UI works, you can go to "Custom App" and configure your container by referencing a Docker image from a repository. I don't normally actually host a Docker registry, instead manually loading the image into the runtime. It might be possible to do that here, but I took the opportunity to set up a quick local-network-only one on my other machine, both because I figured it'd be neat to learn to do that and because I forgot about the Harbor-hosted option on that link.
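As a rough sketch of the tag-and-push side of that, assuming Docker on the machine that built the image: the helper below only wraps `docker tag` and `docker push`, and the registry host and image name in the usage note are made-up placeholders.

```shell
# Sketch: tag an already-built image for a local registry and push it.
# The arguments are whatever the caller passes; nothing here is specific to Domino.
local_ref() {
  # registry host:port + image[:tag] -> host:port/image[:tag]
  echo "$1/$2"
}

push_to_local_registry() {
  registry="$1"
  image="$2"
  target="$(local_ref "$registry" "$image")"
  docker tag "$image" "$target" && docker push "$target"
}
```

The registry itself can be started with something like `docker run -d -p 5000:5000 --name registry registry:2`, after which `push_to_local_registry registry.lan:5000 domino:12.0.2` would publish a (hypothetically named) image. If the registry is plain HTTP, Docker will also want it listed under `insecure-registries` in its `daemon.json` before it agrees to push.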

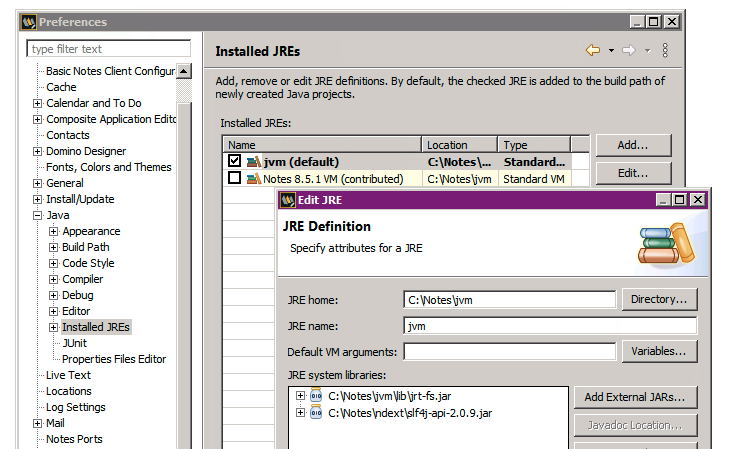

Since the local registry used HTTP and there's nowhere in the TrueNAS UI to tell it to not use HTTPS, I followed this suggestion to configure K3s to explicitly map it. With that in place, I was able to start pulling images from my registry.
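For reference, K3s reads registry overrides from "/etc/rancher/k3s/registries.yaml". Here's a minimal sketch of that kind of mapping. It assumes the standard K3s `mirrors` format and may not match the linked suggestion line for line, and the registry host in the usage note is a placeholder.

```shell
# Sketch: write a K3s registries.yaml that maps a registry to a plain-HTTP endpoint.
# $1 is the registry's host:port, $2 is the file to write.
write_registries_yaml() {
  registry="$1"
  dest="$2"
  mkdir -p "$(dirname "$dest")"
  cat > "$dest" <<EOF
mirrors:
  "$registry":
    endpoint:
      - "http://$registry"
EOF
}
```

Run as root, `write_registries_yaml registry.lan:5000 /etc/rancher/k3s/registries.yaml` would put the file where K3s looks for it. Pointing it at a scratch path first is a harmless way to check the output. K3s only reads this file at startup, so it needs a restart afterward.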

The Domino Version

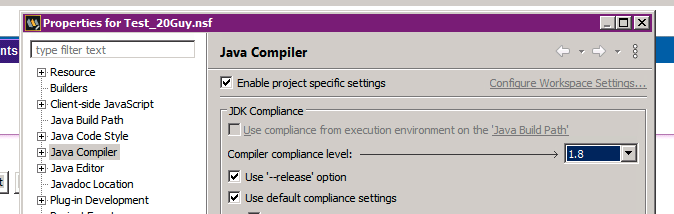

One quirk I quickly ran into was that I can't use Domino 14 on here. The reason for this isn't an OS problem, but rather a hardware limitation: the new glibc that Domino 14 uses requires the "x86-64-v2" microarchitecture level and the Xeon 5150 just doesn't have that by dint of pre-dating it by two years.

That's fine, though: I really just want this to house data, not app development, and 12.0.2 will do that with aplomb.
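If you want to check a machine before trying, here's a small sketch that compares a CPU flag list against the x86-64-v2 feature set. The flag names use the spellings Linux reports in "/proc/cpuinfo" (where SSE3 shows up as "pni"), and the function only inspects the string it's handed.

```shell
# Sketch: report which x86-64-v2 CPU flags are absent from a space-separated flags string.
missing_v2_flags() {
  flags=" $1 "
  missing=""
  for f in cx16 lahf_lm popcnt pni sse4_1 sse4_2 ssse3; do
    case "$flags" in
      *" $f "*) ;;                  # flag present
      *) missing="$missing $f" ;;   # flag absent
    esac
  done
  echo "${missing# }"
}
```

Calling it as `missing_v2_flags "$(grep -m1 '^flags' /proc/cpuinfo | cut -d: -f2)"` prints nothing on a CPU that meets the level and lists the gaps otherwise. On a chip of the Xeon 5150's generation, I'd expect the SSE4 and POPCNT entries to turn up.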

Volume Configuration

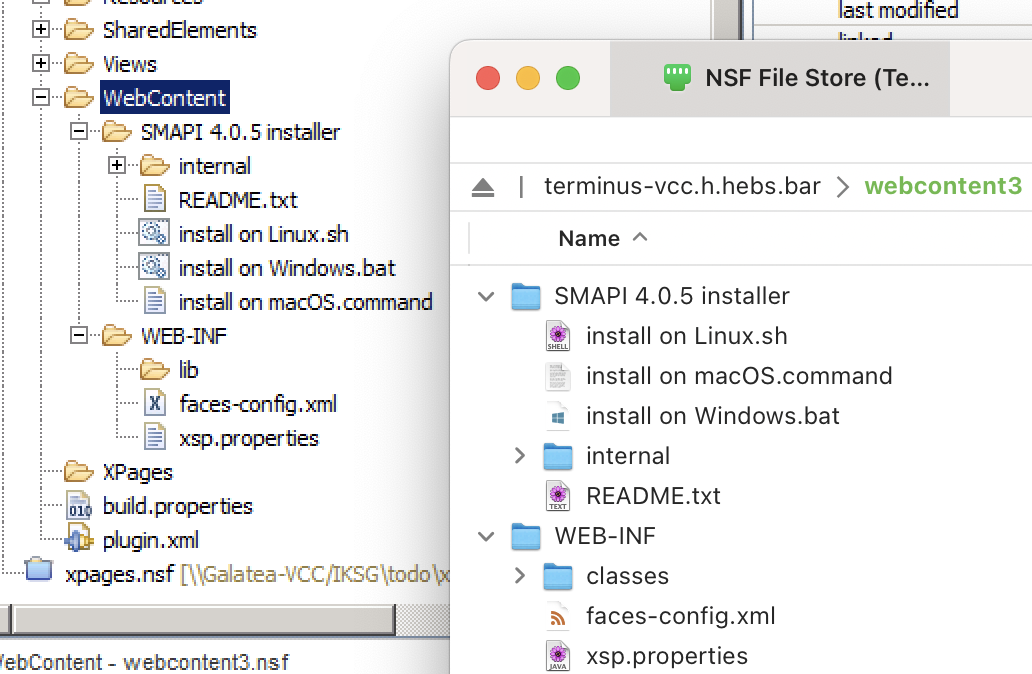

The way I usually set up a Domino container when using e.g. Docker Compose is that I define a handful of volumes to go with it: for the normal data dir, for DAOS, for the Transaction Log, and so forth. This is a bit of an affectation, I suppose, since I could also just define one volume for everything and it's not like I actually host these volumes elsewhere, but... I don't know, I like it. It keeps things disciplined.

Anyway, I originally set this up equivalently in the Custom App UI in TrueNAS, creating a "Volume" entry for each of these. However, I found that, for some reason, Domino didn't have write access to the newly-created volumes. Maybe this is due to the uid the container is built to use or something, but I worked around it by using Host Path Volumes instead. The net effect is the same, since they're in the same ZFS pool, and this actually makes it easier to peek at the data anyway, since it can be in the SMB share.

Once I did that and made sure the container user could modify the data, all was well. Mostly, anyway.
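A quick way to sanity-check that last part is to compare a directory's owner to the UID the container runs as (1000 here, as covered in the Container User section below). This is a sketch that assumes GNU `stat`, which is what a Debian-based system provides, and the path in the usage note is a placeholder.

```shell
# Sketch: succeed if the directory in $1 is owned by the numeric UID in $2.
owned_by_uid() {
  dir="$1"
  uid="$2"
  [ "$(stat -c '%u' "$dir")" = "$uid" ]
}
```

For example, `owned_by_uid /mnt/tank/domino/data 1000 || sudo chown -R 1000:1000 /mnt/tank/domino/data` would only change ownership when it isn't already right.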

Transaction Logs, ZFS, and Sector Size

Once Domino got going, I noticed a minor problem: it would crash quickly, every time. Specifically, it crashed when it started preparing the transaction log directory. I eventually remembered running into the same problem on Proxmox at one point, and it brought me back to this blog post by Ted Hardenburgh. Long story short, my ZFS pool uses 4K sectors and Domino's transaction logs can't deal with that, at least in 12.0.2 and below.

This put me in a bit of a sticky spot, since the way to change this is to re-create the entire pool and I really didn't want to do that.
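If you're not sure what your own pool uses, ZFS expresses the sector size as an "ashift" value, which is the base-2 exponent of the size in bytes. Here's a tiny sketch of the conversion, with a hypothetical pool name in the usage note.

```shell
# Sketch: convert a ZFS ashift value into the sector size it implies (2^ashift bytes).
ashift_to_bytes() {
  echo $((1 << $1))
}
```

Something like `ashift_to_bytes "$(zpool get -H -o value ashift tank)"` prints 4096 for an ashift of 12 and 512 for an ashift of 9. The pool-level property can read 0 if it was left to auto-detect, in which case the per-vdev value is the one that matters.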

I came up with a workaround, though, in the form of making a little disk image and formatting it ext4. You can use a loop device to mount a file like a disk, so the process looks like this:

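    # Create a 1 GB image file, attach it to a loop device, format it, and mount it
    dd if=/dev/zero of=tlog.img bs=1G count=1
    sudo /sbin/losetup --find --show tlog.img   # prints the device it picked, e.g. /dev/loop0
    sudo mkfs.ext4 /dev/loop0
    sudo mkdir -p /mnt/tlog
    sudo mount /dev/loop0 /mnt/tlog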

That makes a 1GB disk image, formats it ext4, and mounts it as "/mnt/tlog". This process defaults to 512-byte sectors, so I made a directory within it writable by the container user (more on this shortly), configured the Domino container to map the transaction log directory to that path, and all was well.

Normally, to get this mounted at boot, you'd likely put an entry in fstab. However, TrueNAS assumes control over system configuration files like that, and you shouldn't edit them directly. Instead, what I did was write a small script that does the losetup and mount lines above and added an entry in "System Settings" - "Advanced" - "Init/Shutdown Scripts" to run this at pre-init.
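A script along those lines could look like the sketch below. The image path in the usage note is a placeholder, and the `DRY_RUN` switch is just an addition for trying it out without touching anything. Init scripts run as root, so there's no `sudo` here.

```shell
# Sketch: attach a disk image to a loop device and mount it.
# $1 is the image file, $2 is the mount point. Set DRY_RUN=1 to only print the plan.
mount_tlog() {
  img="$1"
  mnt="$2"
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "losetup --find --show $img"
    echo "mount <loop device> $mnt"
    return 0
  fi
  mkdir -p "$mnt" || return 1
  # losetup prints the device it attached, so /dev/loop0 doesn't need to be hardcoded
  dev="$(/sbin/losetup --find --show "$img")" || return 1
  mount "$dev" "$mnt"
}
```

The script would end with a call like `mount_tlog /mnt/tank/domino/tlog.img /mnt/tlog`. Capturing the device name from `losetup` matters more at boot than it does interactively, since there's no guarantee the image lands on the same loop device every time.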

Networking

The next hurdle I wanted to get over was the networking side. You can map ports in apps in a similar way to what you'd do with Docker, but you have to map them to a port 9000 or above. That would be an annoying issue in general, but especially for NRPC. Fortunately, the app configuration allows you to give the container its own IP address in the "Add external Interfaces" (sic) configuration section. Since the virtual MAC address changes each time the container is deployed, I gave it a static IP address matching a reservation I carved out on my DHCP server, pointed it to the DNS server, and all was well. All of Domino's open ports are available on that IP, and it's basically like a VM in that way.

Container User

Normally, containers in TrueNAS's app system run as the "apps" user, though this is configurable per-app. The way the Domino container launches, though, it runs as UID 1000, which is "notes" inside the container. Outside the container, on my setup, that ID maps to... my user account, "jesse".

Administration-wise, that's not exactly the best! In a less "for fun" situation, I'd change the container user or look into UID mapping as I've done with Docker in the past, but honestly it's fine here. This means it's easy for me to access and edit Domino data/config files over the share, and it made the volume mapping above work without incident. As long as no admins find out about this, it can be my secret shame.

Future Uses

So, at this point, the server is doing the jobs it was doing previously, plus acting as a nice extra replica server for Domino. It's positioned well now for me to do a lot of other tinkering.

For one, it'll be a good opportunity for me to finally learn about Kubernetes, which I've been dragging my feet on. I installed the Portainer chart from TrueCharts to give me a look into the K8s layer in a way that's less abstracted than the TrueNAS UI but more familiar and comfortable than the kubectl tool for me for now.

Additionally, since the hypervisor works on here, it'll be another good location for me to store utility VMs when I need them, rather than putting everything on the Windows machine (which has half as much RAM).

I could possibly use it to host servers for various games like Terraria, though I'm a bit wary of throwing such ancient processors at the task. We'll see about that.

In general, I want to try hosting more things from home when they're non-critical, and this will definitely give me the opportunity. It's also quite fun to tinker with, and that's the most important thing.